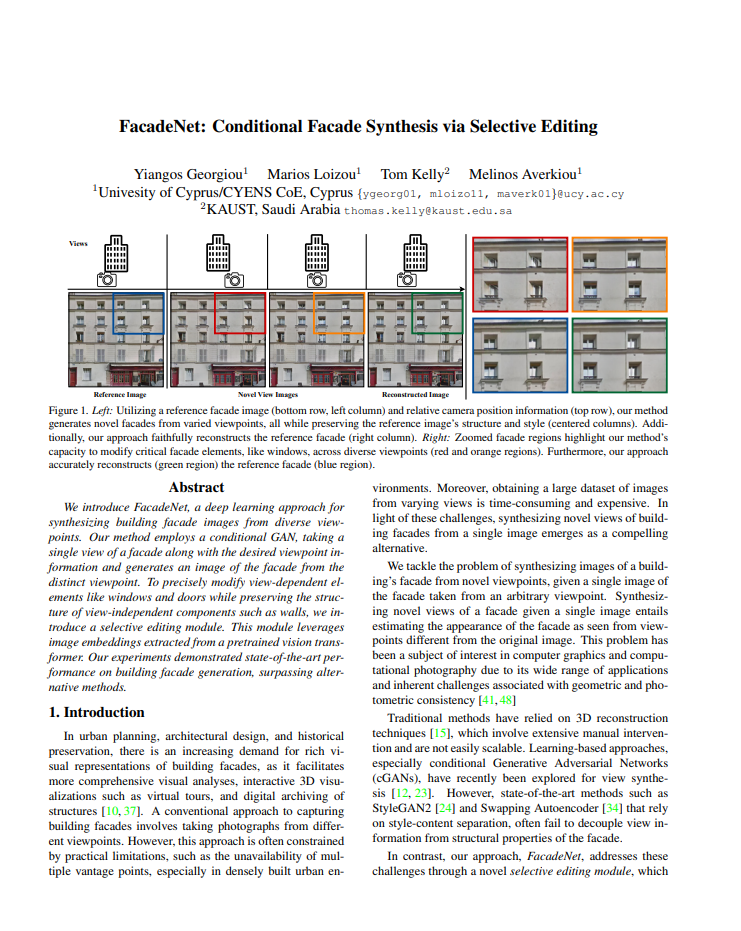

FacadeNet: Conditional Facade Synthesis via Selective Editing

Abstract

We introduce FacadeNet, a deep learning approach for synthesizing building facade images from diverse viewpoints. Our method employs a conditional GAN that takes a single view of a facade together with the desired viewpoint information and generates an image of the facade from that viewpoint. To precisely modify view-dependent elements such as windows and doors while preserving the structure of view-independent components such as walls, we introduce a selective editing module. This module leverages image embeddings extracted from a pretrained vision transformer. Our experiments demonstrate state-of-the-art performance on building facade generation, surpassing alternative methods.

Qualitative Results

View Interpolation

Examples of 5-step image interpolation along the horizontal axis. Given the reference images (left column), our model reconstructs the facade from novel viewing angles, shown in columns 2-6.
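The 5-step interpolation can be pictured as querying the generator at five evenly spaced horizontal viewpoints. The sketch below only illustrates that idea. The function name `interpolation_angles` and the normalized range [-1, 1] are assumptions made for this example, since this page does not specify how the viewpoint is parameterized.

```python
def interpolation_angles(start, end, steps=5):
    """Return `steps` evenly spaced horizontal view values from start to end.

    Hypothetical helper: the normalized range used in the usage example
    is an assumption, not the parameterization used by FacadeNet.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    span = end - start
    # Include both endpoints so the first and last columns are the extremes.
    return [start + span * i / (steps - 1) for i in range(steps)]


# Five viewpoints, one per output column (columns 2-6 in the figure).
angles = interpolation_angles(-1.0, 1.0, steps=5)
print(angles)
```

Each value would then be passed, together with the reference image, to the conditional generator to produce one column of the figure.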

Problematic View Improvement

Examples of improving facade images captured from problematic viewpoints. Each pair shows the reference image (left) and its reconstruction at θ₀, the centered view (right). Our model rotates the facade to a better view orientation than the reference while maintaining high similarity in style and structure.

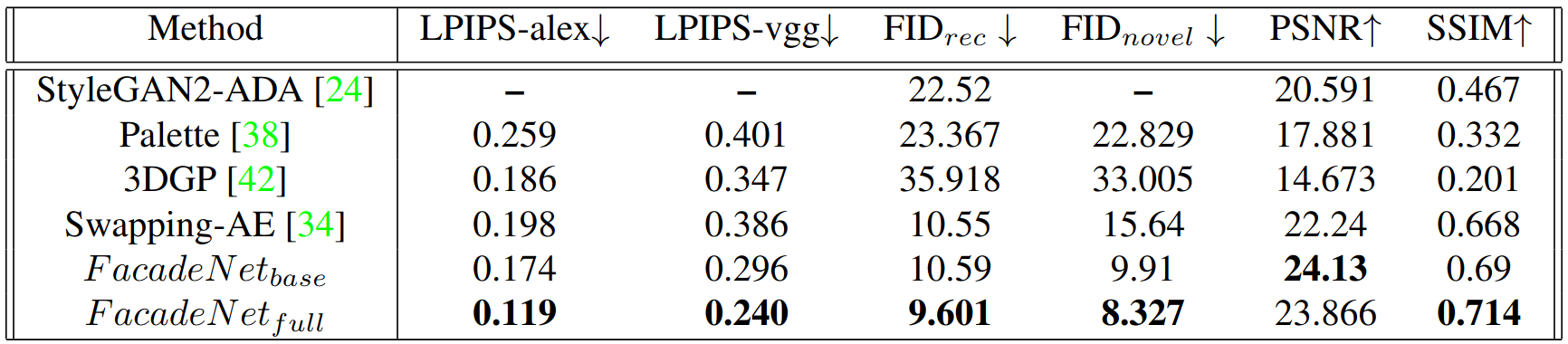

Evaluation

This table compares our baseline FacadeNet_base, StyleGAN2, Palette, 3DGP, Swapping Autoencoder, and our final model FacadeNet. The results show that our task-specific model outperforms the alternatives in reconstruction quality, novel view synthesis quality, and consistency. To assess reconstruction quality, we use the FID_rec, PSNR, and SSIM metrics. For novel view quality we rely on FID_novel, and we measure inter-view consistency with the LPIPS-alex and LPIPS-vgg metrics. Our final model FacadeNet outperforms previous approaches by a significant margin.
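For readers unfamiliar with the reconstruction metrics, the following is a minimal sketch of PSNR under its standard definition, 10 · log10(MAX² / MSE). It is not the evaluation code behind the table. The function name `psnr` and the default 8-bit peak value of 255 are assumptions made for this example.

```python
import numpy as np


def psnr(reference, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    max_value is the largest possible pixel value; 255 assumes 8-bit images.
    """
    ref = np.asarray(reference, dtype=np.float64)
    rec = np.asarray(reconstruction, dtype=np.float64)
    if ref.shape != rec.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy example: a black image against a uniform offset of 25.5 gray levels.
reference = np.zeros((4, 4, 3))
reconstruction = np.full((4, 4, 3), 25.5)
print(psnr(reference, reconstruction))
```

Higher PSNR means the reconstruction is closer to the reference. SSIM, LPIPS, and FID capture structural and perceptual similarity and are typically computed with dedicated libraries rather than by hand.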